Weeknotes: intellectual debt, identity, puppies

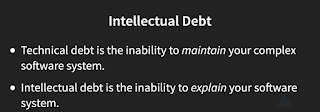

A highlight this week was Neil Lawrence's lecture on Intellectual debt and the death of the programmer. Neil has generously shared his slides and notes online, so I'll just highlight some of the aspects that struck me.

He started by introducing the AI fallacy, that somehow AI is different from other forms of automation and will adapt to us, rather than us adapting to it.

In the section on technical debt, the mythical man month, etc, I was amused to note that Neil called out Amazon's fabled "two pizza team" as American cultural imperialism. The problem arising from the separation of concerns and specialisation of teams is that no one is concerned with the whole system. It's not that software is vastly more complex than, say, building a bridge, but it's harder to assess progress.

Lots of people are concerned with the explainability of individual machine learning models now, but Neil's worry is about the way models are deployed and interconnected. Once a model is deployed, the developers lose control of it, and it's used by unexpected groups in unexpected ways, probably way outside the original design spec, and adding a great deal of uncertainty and risk. The data changes over time; the original training data may be stale, and the assumptions you made about the data may be wrong, or become wrong. Because freshly minted machine learning engineers arrive out of universities to earn astounding amounts of money, no one questions them, and it's assumed the models they create will all be fine and will keep working as they did on day one.

A call for interest which has netted responses from a great many fascinating people:

See also this 2018 CogX talk about machine systems as companion animals, via Sentiers. "The reason I mentioned puppies and dogs is [in] thinking about this as non-human companion species. There’s been a lot written about companion species, relationships, and thinking about the notion of the working animals that we’ve co-evolved with, to sort of extend ourselves and work with, rather than replacing us."

Neil's notes: http://inverseprobability.com/talks/notes/intellectual-debt-and-the-death-of-the-programmer.html

It's perhaps less of a concern for carefully tested and scoped hardware projects such as drones, but for large-scale software, intellectual debt is a real problem. Neil is now working at the Computer Lab on how machine learning systems can be better (see his slide on Milan).

He mentioned a few examples where the AI fallacy is already playing out. One is the idea from the national statistician that we might not need to keep doing a census, because there will be lots of data from other sources. Neil pointed out that we actually need more classical statistics than ever, to verify all this machine learning and data. He calls this the “Big Data Paradox” - that as we measure more about society, we understand less. We need to be able to sanity check our large complex systems - the census is still valuable.

Another example is Facebook and election influence.

Shortly after the 2016 US election Mark Zuckerberg was quoted as saying “To think it influenced the election in any way is a pretty crazy idea”. He has since revisited that conclusion. But was it a conspiracy when he suggested that it hadn’t happened? A conspiracy we could deal with. The deeper and more real problem is that Zuckerberg’s lack of understanding was due to the significant intellectual debt integrated into his vast software ecosystem. It took a further 10 months for Facebook to acknowledge the presence and influence of a coordinated Russian-based influence operation had infiltrated the site.

The key question here is, if Facebook can’t understand when their own systems are under attack, then how can OfCom, the UK regulator expect to be able to understand when Facebook’s systems are breaking UK law?

Finally, he looked at accounting - another field, like data science, where the whole profession believes in the quality of its data. The recent accounting scandals highlight how the big four auditors may not do great audits, possibly because consultancy for the same companies pays so well. If we can't trust today's fairly straightforward money data to provide uncontestable ground truths - if, in fact, accounting - a regulated profession! - is all about interpretation and presentation, how can we trust data scientists?

On our current trajectory, the future will not be people controlled by a smart system running the world, but that we are all slaves of stupid, broken data systems.

It was a great lecture, spanning engineering, social science and computing, and left me inspired to think about the Festival of Maintenance programme in these areas...

I'd somehow missed Zittrain's 2019 article Intellectual Debt: With Great Power Comes Great Ignorance, which Neil references.

This kind of discovery — answers first, explanations later — I call “intellectual debt.” We gain insight into what works without knowing why it works. We can put that insight to use immediately, and then tell ourselves we’ll figure out the details later. Sometimes we pay off the debt quickly; sometimes, as with aspirin, it takes a century; and sometimes we never pay it off at all.

Be they of money or ideas, loans can offer great leverage. ...Indebtedness also carries risks. For intellectual debt, these risks can be quite profound, both because we are borrowing as a society, rather than individually, and because new technologies of artificial intelligence — specifically, machine learning — are bringing the old model of drug discovery to a seemingly unlimited number of new areas of inquiry. Humanity’s intellectual credit line is undergoing an extraordinary, unasked-for bump up in its limit.

Why is unpaid intellectual debt worrisome? First, when we don’t know how something works, it becomes hard to predict how well it will adjust to unusual situations.

Zittrain notes that not all debt is equal - the stakes vary across different machine learning applications, and suggests action:

...

Charmed by its ready-to-hand predictive power, we will embed machine learning — like the asbestos of yesteryear — into larger systems, and forget about it. ...Intellectual debt entails a trade-off for vulnerability that is easy to drift into just the way that technical debt does.

....

There is a second reason to worry as AI’s intellectual debt piles up: the coming pervasiveness of machine learning models. Taken in isolation, oracular answers can generate consistently helpful results. But these systems won’t stay in isolation. As AI systems gather and ingest the world’s data, they’ll produce data of their own — much of which will be taken up by still other AI systems.

....

A third, and most profound, issue with intellectual debt is the prospect that it represents a larger movement from basic science towards applied technology, one that threatens to either transform academia’s investigative rigors or bypass them entirely. ...Industry may be perfectly satisfied with answers that lack theory. But ... intellectual debt will build in societal pockets far away from the academics who would be most interested in backfilling the theory. And an obsession only with answers — represented by a shift in public funding of research to orient around them — can in turn steer even pure academics away from paying off the intellectual debt they might find in the world, and instead towards borrowing more.

...

A world of knowledge without understanding becomes, to those of us living in it, a world without discernible cause and effect, and thus a world where we might become dependent on our own digital concierges to tell us what to do and when. It’s a world where home insurance rates could rise or fall by the hour or the minute as new risks are accurately predicted for a given neighborhood or home. The only way to make sense of that world might be to employ our own AIs to try to best position us for success with renter’s insurance AIs (“today’s a good day to stay home”); hiring AIs (“consider wearing blue”), or admissions AIs (“volunteer at an animal shelter instead of a homeless shelter”), each taking and processing inputs in inscrutable ways.

...

When we have a theory, we get advanced warning of trouble when the theory stops working well. We are called to come up with a new theory. Without the theory, we lose the autonomy that comes from knowing what we don’t know.

...we should invest in a collective intellectual debt balance sheet. ...

We must keep track of just where we’ve plugged in the answers of an alien system, rather than tossing crumpled IOUs into a file cabinet that could come due without our noticing.

Replacing middle management with APIs, via Adrian McEwen (and from 2015!)

In the long run there’s always something for people to work on and improve, but the introduction of this software layer makes me worry about mid-term employment 5-20 years out. Drivers are opting into a dichotomous workforce: the worker bees below the software layer have no opportunity for on-the-job training that advances their career, and compassionate social connections don’t pierce the software layer either. The skills they develop in driving are not an investment in their future. Once you introduce the software layer between “management” (Uber’s full-time employees building the app and computer systems) and the human workers below the software layer (Uber’s drivers, Instacart’s delivery people), there’s no obvious path upwards. In fact, there’s a massive gap and no systems in place to bridge it.

Feels like another piece in the bullshit white collar jobs puzzle.

What happened to Softbank's huge investment in pizza delivery robot trucks? Not much, it turns out, in this amusing note about really big venture investment and the people who take it.

STEM's bait and switch. Is this true of other professions at all?

https://twitter.com/STS_News/status/1228330842621202432

https://twitter.com/sedyst/status/1227506955478011904

Sign the petition for the right to repair smartphones.

Lots of interesting bits in this Aeon essay on technologies, complex systems, collaboration and empathy.

Enspiral is a fascinating group, and I've always got a lot out of conversations and events with Enspiral people. They've commissioned a report into how it's going, and there's a summary post:

Enspiral defies simple explanation. It is many things to many people at once. It is a mutual aid network. It is a social impact entrepreneurial incubator. It is a future of work and new economy catalyst. It is a collection of channels on different mediums punctuated by meet-ups and retreats. It is a network of colleagues and friends.

...

People at Enspiral believe in a paradigm shift in how we all think about work and social impact, and they’re trying to create utopia and prepare for collapse at the same time. Much of the work that an organization like Enspiral does is intangible and personal, defying the logics of traditional qualitative and quantitative methodologies in its subtle approach to transformative subversion.

...

In a traditional organization, an impact report starts with the mission. That mission breaks down into different goals that can be evaluated against in some form. Since Enspiral is an “ecosystem of purposes,” rather than a traditional non-governmental organization or social impact venture, making sense of how Enspiral is contributing to the social change it envisions is difficult. Enspiral is not just building one better world. Enspiral is trying to help nurture a range of experiments all with their own unique, and even contradictory, theories of change.

Also a shout out to Liminal, another interesting collective, where I've recently joined the Slack community. I love the guiding lights of authenticity, accountability and action detailed in Roland Harwood's recent update too.

Thanks Lilian Edwards for the idea of 'medium talk':

https://twitter.com/lilianedwards/status/1227022696917225473

(and the linked Wired article calling for small talk to be banned is good too). The lowRISC engineering team were particularly good at medium talk, which I'll miss.

There are a lot of good ideas in Basecamp's "how to communicate" (HT Alix Trot): slower, deeper communication, through long form writing and consideration. I read this and wonder, though, how I'd feel about it if writing my thoughts out didn't come naturally to me; it has always seemed to help me thrive in remote organisations.

SOS Sahel International, an NGO, is closing down - because it has completed its mission. Designing for this sort of thing - and being ready to shut up shop rather than staying around for the sake of it - is still very rare. It's good to see an example in practice.

Privacy International write to Google to highlight a structural failing in the Play Store / phone manufacturers system. These structural issues are so critical because they affect people unavoidably at scale:

Android Partners - who use the Android trademark and branding - are manufacturing devices that contain pre-installed apps that cannot be deleted (often known as "bloatware"), which can leave users vulnerable to their data being collected, shared and exposed without their knowledge or consent.

These phones carry the "Google Play Protect" branding, but research shows that 91% of pre-installed apps do not appear in Google Play – Google’s app store.

These pre-installed apps can have privileged custom permissions that let them operate outside the Android security model. This means permissions can be defined by the app - including access to the microphone, camera and location - without triggering the standard Android security prompts. Users are therefore completely in the dark about these serious intrusions.

We are concerned that this leaves users vulnerable to the exploitative business practices of cheap smartphone manufacturers around the world.

Ross Anderson reports from Financial Cryptography 2020, starting with a terrific overview of all the challenges with the way we handle identity security:

In a world where even Jack Dorsey got his twitter hacked via incoming SMS, what is identity? Your thief becomes you. Abuse of old-fashioned passports was rare as they were protected by law; now they’re your email address (which you got by lying to an ad-driven website) and phone number (which gets taken away and given to a random person if you don’t pay your bill). If lucky you might have a signing key (generated on a general purpose computer, and hard to revoke – that’s what bitcoin theft is often about). The whole underlying system is wrong. Email domains, like phone numbers, lapse if you forget to pay your bill; fraudsters actively look for custom domains and check if yours has lapsed, while relying parties mostly don’t. Privacy regulations in most countries prevent you from looking up names from phone numbers; many have phone numbers owned by their employers. Your email address can be frozen or removed because of spam if you’re bad or are hacked, while even felons are not deprived of their names. Evolution is not an intelligent process! People audit password length but rarely the password reset policy: many use zero-factor auth, meaning information that’s sort-of public like your SSN. In Twitter you reset your password then message customer support asking them to remove two-factor, and they do, so long as you can log on! This is a business necessity as too many people lose their phone or second factor, so this customer-support backdoor will never be properly closed. Many bitcoin exchanges have no probation period, whether mandatory or customer option. SIM swap means account theft so long as phone number enables password reset – she also calls this zero-factor authentication.

SIM swap is targeted, unlike most password-stuffing attacks, and compromises people who comply with all the security rules. Allison tried hard to protect herself against this fraud but mostly couldn’t as the phone carrier is the target. This can involve data breaches at the carrier, insider involvement and the customer service back door. Email domain abuse is similar; domain registrars are hacked or taken over. Again, the assumptions made about the underlying infrastructure are wrong. Your email can be reset by your phone number and vice versa. Your private key can be stolen via your cloud backups. Both identity vendors and verifiers rely on unvetted third parties; vendors can’t notify verifiers of a hack. The system failure is highlighted by the existence of criminal markets in identity.

Why does Google (and Bing) use reddit as a source of facts for search result fact boxes? A great thread with some Google responses, thanks Nathan Matias.

Thread about how online searches (and algorithmic matches) are being used - to ridiculous and worrying effect.

John Havens from the IEEE's Global Initiative on Ethics of Autonomous & Intelligent Systems has produced a report calling for AI to be designed with climate change in mind - "Measure what matters in the Era of Global Warming and the Age of Algorithmic Promises." The full report is here

Dan Hon develops the idea that connected devices and internet systems should ideally be as smart as a puppy (a nice level of intelligence) into being as loyal as a puppy, which is a nice way to describe how products should be designed:

https://twitter.com/hondanhon/status/1228379758422978561

Heather Burns gave a talk about WordPress as an example of an open source project which has not engaged with today's big open web debates. Who would engage with policy makers? How would they represent the project?

“We live in times where dreams can be fulfilled and nightmares can come true very quickly.”

We build the WordPress software to fulfil dreams, and it does. The software we build is also used to make nightmares come true, very quickly.

We have chosen not to speak up for ourselves when our project has been misused. We have chosen not to speak up for our users when their dreams risked turning into nightmares.

We have so little time left.

We are always told we should contribute to WordPress. We are always told we should give something to the project. We’ve done that. We’ve got it to 35% [market share]. We’ve won.

We’ve transformed the web. Now it’s time to save it.

It’s time for WordPress to stop telling us to contribute, and for WordPress to start contributing back.

Our legacy to the world, as contributors and as a project, will not be our code, or our blocks, or our market share. It will be whether we choose to show up to what lies ahead, and what impact we make. The open web needs us, as a community, as a project, and as a movement, for the people who use the tools we build along the way.

Photos of Dutch agricultural landscapes - large scale farming. The carefully controlled conditions remind me of a recent conversation about agri-tech innovations which tend towards hydroponics, soil-free growing etc. In an era where we are just learning about the importance of microbiomes in all kinds of aspects of health, we might be moving towards a food system where soil biomes are less accessible.

Where have equipment schematics gone? Why did they go away? An example from Tek Scopes.

Via Projects by IF, the new (sea) otter emoji. Feels like this could be useful for lots of things. 🦦

Lovely public domain biodiversity heritage content!

... the world’s largest open access digital archive dedicated to life on Earth, the Biodiversity Heritage Library is comprised of animal sketches, historical diagrams, botanical studies, and various scientific research collected from hundreds of thousands of journals and libraries around the globe. In an effort to share information and promote collaboration to combat the ongoing climate crisis, the site boasts a collection of more than 55 million pages of literature, some of which dates back to the 15th century.

https://www.flickr.com/photos/biodivlibrary/49468558886/in/datetaken/