Weeknotes: interdisciplinary ethical AI, food+environment, public assets, misc tech bits

A real highlight this week was a talk by Luke Church and Sharath Srinivasan of Africa's Voices Foundation. It is a rare delight to see a genuinely interdisciplinary presentation, combining computer science and social science perspectives - and to have speakers recognise that the audience likely understood terms such as 'integrity' very differently.

Both speakers brought critical reflections on their own fields. Luke spoke of the moral toxicity of the tech industry; Sharath of how, to scale social research, practitioners shift the interpretive burden to others, 'instrument' research subjects, or abstract away the inescapable role of interpretation, and take data as fact. Social science methods are political, material, self-interested and pragmatic. On the other hand, tech and data methods (at least as practised by most of the tech industry) are covertly political, essentialist, capitalist. Such methods seek to reduce or clean reality into formalisms; and then reify the formalised representation. They take the world, simplify it, claim the simplification is the essence of the world, and then we wield it back. Reality becomes what can be expressed to a computer. (And this is rather tough, as computer scientists know well that computers understand nothing.)

I appreciated the concept of the 'death of social sciences' - now everyone is a data collector, an analyst, a researcher. Everyone is an expert, or no one is; or perhaps only 'the kings of computation' are.

With these critical perspectives, we can imagine an interdisciplinary frame which could take the worst of both worlds. You could place a lower burden on research subjects through surveillance and datafication, relieve the interpretative burden by scaling pattern detection, and become complicit in how knowledge construction like this shapes resource allocation and power. (This is to some extent how much of the humanitarian sector is thinking about data technologies - they are amassing data points, and hoping that magic will happen so that this will enable good things for their beneficiaries.)

Luckily, AVF does not do this :) Instead, Luke and Sharath talked about interdisciplinary work to create a common social accountability platform, which maximises the chance that citizen perspectives are heard in decisions that affect their lives. Today's humanitarian and development surveys are shaped by the sector, programme or mandate involved - so a local resident may be asked today to talk about resilience, tomorrow to talk about durable solutions (both humanitarian sector terms), because of the structures and politics of the aid organisations. The AVF vision of citizen engagement and evidence for strengthening social accountability is about connecting people with authorities in ways that make sense to them. They are finding that this has value - their platform was used in Mogadishu and it turned out people thought crowdfunding (broadly understood) was a good way to tackle some issues, but no aid organisation working in, say, shelter or livelihoods would have been able to ask an open question which would have found this idea.

They use local radio combined with SMS or other messaging. Engaging citizens on their own terms as social agents carries burdens - you have to go where they are, curate collective discussion they value - and so this sort of method has a very different cost to extractive data collection and surveillance. If you do it well, you get lots of messy textual data, which you need to understand. AVF use complex mixed methods to give this material expression to influence decision-makers. It's not just a conventional technical solution, because "data science senses voices, it doesn't listen to them".

The AVF perspective on ethical use of AI is that interpretation of data matters, and should be done by people; that it's about augmenting, not automating labour; and that technologies should be designed for doubt, and for curiosity. It is better, therefore, to get people to do overt interpretation of opinion information (the messy text data), than to have machines doing covert politics with it. So machine learning/AI is used to augment the interpretative capacities of human researchers, with explicit representation of structural and value uncertainty. Provenance tracing is used to maintain authenticity. The AI helps the data processing to be both human, and to work at larger scale than would be possible without it, supporting the human work of research analysis.

The result is that AVF are using AI to help build engagement spaces, with rigorous and timely qualitative evidence for interpretative insight by humans for decisions that matter at scale. These spaces are justified by people who feel agency over the decisions which affect them, as well as valuing the discussion itself. The research creates a different sense of social-embodied knowing, where we feel and understand differently because human authenticity is retained.

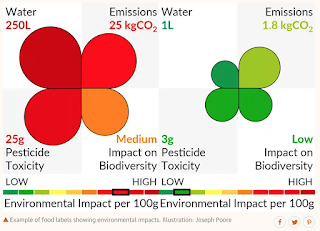

Joseph Poore's talk about food in the Cambridge Climate Lecture series was exceptional. You can watch it online. He made a very compelling case for an environmental impact label to be compulsory for food, and why that was the critical lever in a systemic change to how we produce, process and consume food. His arguments were not just about carbon, but a broader consideration of biodiversity and pollution. Land use was another critical angle - land not used for food can be reused for planting trees to act as a carbon sink. (His Science paper is available on his website.)

The talk was great - full of startling facts, great charts and graphics, and carefully assembling his case. His research was basically a systemic review, looking at impacts from deforestation through to consumer packaging waste, and involved getting more data from the authors of previous studies for deeper analysis. Amazingly, it was unfunded work - and came about, in essence, because he had underestimated the work required. It seemed like the evidence was clear to start a policy conversation, but Poore called for more work first on some aspects - particularly metrics for biodiversity needed to design a useful label.

I would have liked to have taken better notes, and captured some of the key slides - especially the ones showing the range of environmental impact within single food types. Some of the standout facts from my notes:

- since 1950, the global CO2 level has had 99% correlation with global human population

- over 50% of land area is used for agriculture - plants, pasture, ranging grounds

- food systems produce 26% of greenhouse emissions, cause 1/3 of the total acid rain and 78% of eutrophication.

- reducing the environmental impact of food is tougher than for sectors like transport, because there are millions of small food producers, rather than the handful of global conglomerates which dominate other sectors (and which can more easily be targeted by policy changes)

- methane release from farmed aquaculture can be worse than from cows

- there is a huge variation in environmental impact within some crops (e.g. rice or tomatoes); even within similar growing regions there can be up to a five-fold variation (when weighted per calorie of food)

- smaller farm areas and organic and permaculture methods had lower impacts

Poore's recommendations were really three-fold, with labels as the key lever: farmers should use digital tech to monitor environmental impacts; policy should set targets on impacts, not on practice; and consumers should be given incentives via labels. The need for data is critical in an opaque sector, where both farmers and policy makers struggle to know what to do; mandatory labels would drive the use of technology to gather that information. Interestingly, although he presented a scenario where we stop consuming animal products (a 100% vegan model), he also suggested that a 50% reduction in animal product consumption, with a focus on reducing the highest impact production (of both animal and plant foods), could achieve good environmental impact too. Proteins are an area where consumers make choices, so giving them environmental info there could be especially effective.

There's a nice mockup of how the labels could be in this 2018 Guardian article:

https://www.theguardian.com/environment/2018/oct/10/we-label-fridges-to-show-their-environmental-impact-why-not-food

Last week I linked to Michael Feldstein's article about hype in educational technologies. He has a follow up out now, touching on some of the reasons this happens. There's an interesting angle of how, if you are well-intentioned and genuinely want to do good in the world, a combination of vision and external growth enablers/pressures (such as venture capital funding) can lead to a path which actually doesn't deliver the vision after all.

There's something similar about how it is actually harder to be a "social good" venture, or a "purpose+profit" startup, than a straightforward "just for-profit" one. If you are just going after profit, within the law, you can see what good looks like and you can pursue it. You can adopt popular playbooks without much thought; your whole team know what metrics matter without needing to stop and think. If you are also trying to create some other value, it's likely harder to measure, so you are mixing up the easy finance metrics (because you still need to pay salaries etc) with hard, poorly-defined goals around whatever your mission is. You may also be trying to do stuff 'right' in a broader sense - paying above the minimum wage, say, or not using 'dark patterns' to encourage customers to buy more or accidentally tell their friends about your product - which actively reduce your financial performance in the near term. That means founders and teams must make hard choices without clear guidance, in murky 'grey areas,' every day. Plus you are held to higher standards in a social good venture - any sort of slip, on any area where you might be expected to do better, can lead to criticism. It's tough. (and, incidentally, one of the things we want to address at the Impact Union - where we'll have more updates soon - and at Doteveryone, in different ways).

A good rant about the bits of our infrastructure that fall between the cracks - in this case, pallets, which have been in the news because of Brexit, but perhaps should be in the news as an example of efficiency and outsourcing and avoided responsibility leading to problems for small businesses:

This theme is echoed by Chenoe Hart in Real Life magazine, looking at the future of shipping and robotic delivery, the branding on containers of goods (or not, for generics), the need (or not) for storage.

And then there's the waste problem if we have a no deal Brexit.

A terrific (and depressing) investigation from The Bureau of Investigative Journalism about public assets being sold by local authorities in the UK. In the past, selling an asset such as a building could only be done to fund the purchase of a new public asset, but now councils can sell in order to fund immediate cost-cutting measures. The amounts spent on redundancies, for instance, are astonishing. But the loss of public amenities, especially in the poorest parts of the country, is the real issue.

Councils selling public land and buildings to fund cost-cutting measures - a policy called the “flexible use of capital receipts” - must in the government’s words, “demonstrate the highest standards of accountability and transparency.” This means producing annual reports detailing the amount of money being spent, the projects funded and the savings targets set.

.... Our investigation found those standards were not always being met, preventing proper scrutiny and denying the public the chance to properly debate the decisions about what is sold and what the money is used for.

Two thirds of councils are not fully adhering to the rules around the data they have to publish about the land and buildings they own, we found.

In a similar vein, Tony Hirst writes about the diminishing public realm in terms of land in housing developments. Tony's posts are always thoughtful, humble, and data-/evidence-driven, and explore questions from different angles, and this is no exception, looking at the loss of public roads and space, the removal of rights near your home, and the increase in total cost of ownership of property. His OUseful blog is one I've been reading and finding fascinating material in for many years - recommended.

In more positive news, Preston is kickstarting a more co-operative economy.

I have mixed feelings about Nicholas Negroponte's work, but his post this week about the "big ideas famine" struck a chord:

I believe that 30 years from now people will look back at the beginning of our century and wonder what we were doing and thinking about big, hard, long-term problems, particularly those of basic research. They will read books and articles written by us in which we congratulate ourselves about being innovative. The self-portraits we paint today show a disruptive and creative society, characterized by entrepreneurship, start-ups and big company research advertised as moonshots.

But we are not doing the things that would lead to actual solutions to big problems. Dynamics around R&D have changed in recent decades.

First, which successful company has benefited the most from basic science and technology, yet given the least back to it? The answer: Apple. ... yet there is a long history of precompetitive basic science that, for example, came from the likes of Bell Labs (like semi-conductors), later IBM, and more recently Microsoft. ...

Big problems have solutions which ... can only be achieved by sharing, by working together, by pooling insights and by standing on each other’s shoulders. Government labs served some of these purposes before they were closed down, as did universities before their faculty became distracted by creating start-ups and IP.

Scott Weingart does a deep dive tracing information sources online. It does not make him happy.

It’s frustration at the fact that we write in an environment that affords full references and rich hyperlinking, and yet we so often revert to context-free tumblr-like reblogging which separates text from context and data. We’re reverting to the affordances of 18th century letters, 19th century newspapers, 20th century academic articles, etc., and it’s frustrating.

Awareness of ethical codes may not affect the behaviour of tech developers - a 2018 paper.

A good (long) introduction (with lots of examples), setting out different examples of social problem solving practice. I liked that the GDS (Government Digital Service) style, which seems to be called "digital" as a practice in some communities, is described as "UX" (user experience) which feels closer to familiar and useful language to me.

A great Twitter thread detailing the challenges with virtual reality and augmented reality systems (especially the ones where you wear a headset and wave your arms)

A set of discussions about public-interest technologists from Bruce Schneier's mini-track at the RSA Conference. RSA probably means very different things to people who might be reading this :) I am very interested in where public-interest technologists work and how their labour is resourced. In all the tech regulation discussions there are calls for more tech people to work at regulators, but we'd be horrified at the idea of public sector salaries anywhere close to what Google et al pay.

Hillary Predko in The Prepared with a useful reminder about shell games in the distributed ledger space:

https://theprepared.org/

Nick Hunn reflects on Mobile World Congress, which this year was all about 5G, except still no one knows what this is or why you'd really need it. Nick's thoughts on IoT, and whether or not anything has really moved on since the early 2000s, are worth a read. He also has a clear sense of how 2019 is different from 2018:

A view sadly affirmed by friends who were at MWC this year and reported both human and pet toilet innovation activity. Overall, though, wireless audio is where it's at according to Nick:

Despite the effort put in by the GSMA to lead the horse to water, giving it the sparkling option of 5G or the still waters of IoT, it seemed that all the horse wanted was a pair of noise-cancelling earbuds which would cut out the noise of the PR hype.

Email lists have great archiving, because so many different mailboxes will have copies. Joe Hughes pointed me at LOCKSS, a peer to peer system for digital preservation.

A very useful find - https://perma.cc/

Websites change. Perma Links don’t. Perma.cc helps scholars, journals, courts, and others create permanent records of the web sources they cite. Perma.cc is simple, easy to use, and is built and supported by libraries.

There's a lovely art exhibition around the Alison Richard building in Cambridge at the moment, from the thoughtful folks at the Centre for the Study of Existential Risk. "GROUND ZERO EARTH: A NEW EXHIBITION SHOWING THE TIME IS NOW OR THE FUTURE IS NEVER." Anyway, it's not very many minutes to midnight at the moment.

Pascal Finette on owning your mistakes and how to handle the awkward situation where you've been misquoting something for ages.

Low tech websites can be quite energy efficient. Low-Tech Magazine has a feature on how to build one. (I love the magazine's tagline: Doubts on progress and technology.)

Dave Birch has a great point in his post about AI and blockchain, which is that writing software is an obvious field where computers will do better than people in many areas. I've felt that we may not be helping when we tell people that basic coding, the sort of thing you get in bootcamps, may provide a guaranteed job for life. It is probably fine now, but it seems an obvious target for automation.

Researchers from Oak Ridge National Laboratory in the US foresee AI taking over code creation from humans within a generation. They say that machines, rather than humans, “will write most of their own code by 2040”. As it happens, they’ve started already. AutoML was developed by Google as a solution to the lack of top-notch talent in AI programming. There aren’t enough cutting edge developers to keep up with demand, so the team came up with a machine learning software that can create self-learning code… Even scarier, AutoML is better at coding machine-learning systems than the researchers who made it.

At last, a term for the interesting things that are not actually blockchains - post-blockchain. Of course. It's actually quite an incidental term in a substantial book-scale draft - "P2P Accounting for Planetary Survival" which also explores ideas such as Ostrom contracts to replace smart contracts. This may well be the reading I need to do to cohere my thoughts around a bunch of distributed system / ledger / economics topics, as it includes case studies of lots of things like Holochain, FairCoin, and ways to place value on natural resources, and accounting systems that consider externalities, and new organisational forms... So I look forward to actually reading it properly. Huge gratitude to Michel Bauwens, Alex Pazaitis and the other collaborators for assembling it all.

I wrote up a recent talk about tech regulation separately. This week also saw a new report from Nesta about renewing regulation, and balancing regulation and innovation.

In the past decade there have been two major global crises of regulation – the first triggered by the 2008 financial crisis, the second by attacks on the integrity of the 2016 US Presidential election. Both are also crises of innovation, posing urgent questions about how we secure the benefits of innovation in ways that command public confidence and without courting unacceptable risks. They demonstrate that innovation, in particular the commercial deployment of innovation, is – or should be – at the centre of regulators’ concerns.

This is not just an issue about finance and social media. There are a range of emerging or maturing technologies – artificial intelligence, the internet of things, drones, gene editing and digital health services – that promise both great economic and social benefits, but also entail new kinds of risk. Each could evolve on many different trajectories, the most sustainable of which are likely to be the product of an interplay between public and private actors. Unhelpfully, public and political discussion about regulation has typically pivoted between theoretical (or theological) arguments about whether we should have ‘more’ or ‘less’ of it, and whether activity x or y should be regulated. Far less attention has been paid to the actual practice of regulators, in particular as it relates to innovation.

The report includes suggestions about reframing the narrative of regulation, and the challenge of regulating as sector boundaries are eroded by information.

Did you know how a text message moves from one phone to another? It's quite complicated - Scott Weingart (again) tells the story down to the bit-level in this Motherboard feature. A useful reminder of all the bits (ha) and pieces which make the internet useful, and of the engineering, standards, and labour required to make it thus.
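
To give a flavour of what "down to the bit-level" can mean: in the default GSM text encoding each character takes 7 bits, and those 7-bit values are packed tightly into bytes, which is how 160 characters fit into a 140-byte message. Below is a minimal Python sketch of that packing step. It is my own illustration rather than anything taken from the feature; the function names are made up, and it assumes the text only uses letters, digits and spaces, where ASCII codes happen to coincide with the GSM 7-bit alphabet.

```python
import string

# Assumption: for these characters the ASCII code equals the GSM 7-bit code.
# Other characters (e.g. '@', '$', '_') differ and are deliberately rejected here.
SAFE_CHARS = set(string.ascii_letters + string.digits + " ")


def pack_septets(septets):
    """Pack 7-bit values into bytes, least significant bits first."""
    out = bytearray()
    acc = 0      # bit accumulator
    nbits = 0    # number of valid bits currently held in acc
    for s in septets:
        acc |= (s & 0x7F) << nbits
        nbits += 7
        while nbits >= 8:
            out.append(acc & 0xFF)
            acc >>= 8
            nbits -= 8
    if nbits:
        out.append(acc & 0xFF)  # final partial byte, zero-padded at the top
    return bytes(out)


def unpack_septets(data, count):
    """Reverse of pack_septets: recover `count` 7-bit values from bytes."""
    out = []
    acc = 0
    nbits = 0
    for b in data:
        acc |= b << nbits
        nbits += 8
        while nbits >= 7 and len(out) < count:
            out.append(acc & 0x7F)
            acc >>= 7
            nbits -= 7
    return out


def encode_sms_text(text):
    """Hypothetical helper: encode simple text as packed 7-bit data."""
    if not set(text) <= SAFE_CHARS:
        raise ValueError("sketch only handles letters, digits and spaces")
    return pack_septets([ord(c) for c in text])


def decode_sms_text(data, count):
    """Hypothetical helper: decode `count` characters of packed 7-bit data."""
    return "".join(chr(s) for s in unpack_septets(data, count))
```

For example, `encode_sms_text("hello")` packs five characters into five bytes, and a full 160-character message comes out at exactly 140 bytes. A real handset also has to deal with the full alphabet table, escape sequences, headers and multi-part messages, none of which is covered here.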