Weeknotes — diffusion, corporate culture, email

Mostly things I read the week before last.

I’m not a big fan of the idea that leaving Facebook is great advice, or a way to change the world if many of us did it, but it seems popular these days. It always feels like a very privileged position, to be able to step out of a social infrastructure which supports so many useful interactions for so many people. Local communities, volunteer groups, friends and family dispersed around the world — these are not things everyone can just give up without sacrifice. And so I was pleased to see Joanna Bryson writing about these important connections and the idea of perhaps nationalising it, setting out to fix it together, instead.

A paper from Farrell and Schneier about Common Knowledge attacks on democracy, proposing we take a cyber security approach when thinking about how information can be manipulated on a large scale. Seems a useful analogy, if only to encourage a new mindset among the technical folks who tend to jump to the idea that technology can fix all these social problems with ease, without thinking through the underlying causes at all.

Tom Forth discusses the relative wealth (in income terms) of Northern towns vs cities in this Twitter thread. Turns out, towns are usually better off than the cities they surround; and it’s all notably different to London.

Via The Prepared, this from the Economist:

The value of the average Japanese house depreciates to zero in 22 years, as most are knocked down and rebuilt on a shorter time horizon. This leads to interesting second-order effects, but makes less sense now that the country’s population is shrinking (Japan currently has 10 million abandoned homes).

Seems very different to the UK. I wonder how they compare in terms of sustainability (materials, embodied energy).

This Atlantic article is mostly a rant about computers and the internet ending up in inappropriate places — turning reliable, understandable objects into erratic, mysterious ones. Having worked with IoT, I want rather less of it in my life than more; and less than I used to have, as fun, useful items such as the Nabaztag disappear, and more privacy-invading objects arrive on the market. I do worry that I may already be too late to update our household TV, if we want a new one which is not smart.

Even though it’s in these companies’ immediate business interest to solve abuse problems now, making online spaces safer is assumed to require a computational answer. Human content moderation is notoriously hard, admittedly. And the volume of content is so high, a matter Twitter emphasizes, that computational systems are needed to manage and operate them.

Why would anyone ever choose a solution that doesn’t involve computers, when computers are available? Propane tanks and bike locks are still edge cases, but ordinary digital services work similarly: The services people seek out are the ones that allow them to use computers to do things — from finding information to hailing a cab to ordering takeout. This is a feat of aesthetics as much as it is one of business. People choose computers as intermediaries for the sensual delight of using computers, not just as practical, efficient means for solving problems.

That’s how to understand the purpose of all those seemingly purposeless or broken services, apps, and internet-of-things devices: They place a computer where one was previously missing. They transform worldly experiences into experiences of computing. Instead of machines trying to convince humans that they are people, machines now hope to convince humans that they are really computers. It’s the Turing test flipped on its head.

Also a useful point about how scale, and the demand for efficient “zero marginal cost” growth, drives less human thinking:

But perhaps managing abuse is “a very, very hard challenge” largely on account of that assumption. The very idea of a global network of people able to interact with one another anonymously precludes efficient means of human intervention. Twitter’s answer assumes that their service, which is almost entirely automated by apps and servers, is perfectly fine — they just need to find the right method of computational management to build atop it. If computer automation is assumed to be the best or only answer, then of course only engineering solutions seem viable.

Again, the Atlantic, this time an interview with Arlie Hochschild who coined the term “emotional labour” and is now concerned about the concept creep, whereby the meaning is shifting from her original intent. It was helpful to me to separate mental labour (keeping track of tasks and making sure they get done) from emotional labour, whether that’s the stress of worrying about those tasks, or of managing your emotions to present the ‘appropriate’ face to the world or to colleagues, or indeed the stress of wondering whether the way you are acting is the best. The idea of some types of work becoming alienated, especially emotional or care-giving work, and the way tasks at work and at home are perceived, was also very interesting.

I also enjoyed Nathan Matias’s Twitter comment on this, and how academic ideas diffuse, or influence.

Diffusion came up a few times. At the first conference of the Cambridge Global Challenges Initiative, there was talk about the way new/clean cookstoves are adopted in communities, compared to the way rich world people adopt (or don’t) recycling. Why is recycling still so hard? Labels are confusing, local authority guidance varies widely; those who work at recycling still don’t always get it right, contaminating collected materials; many people don’t bother, although they are happy to reject plastic straws in a big way. We still don’t “reduce” or “reuse” much. There’s a collective action benefit if we do it, but it’s a change in our routines and maybe a little more effort up front. Perhaps not that dissimilar to cookstoves — where having cleaner stoves available does not guarantee uptake.

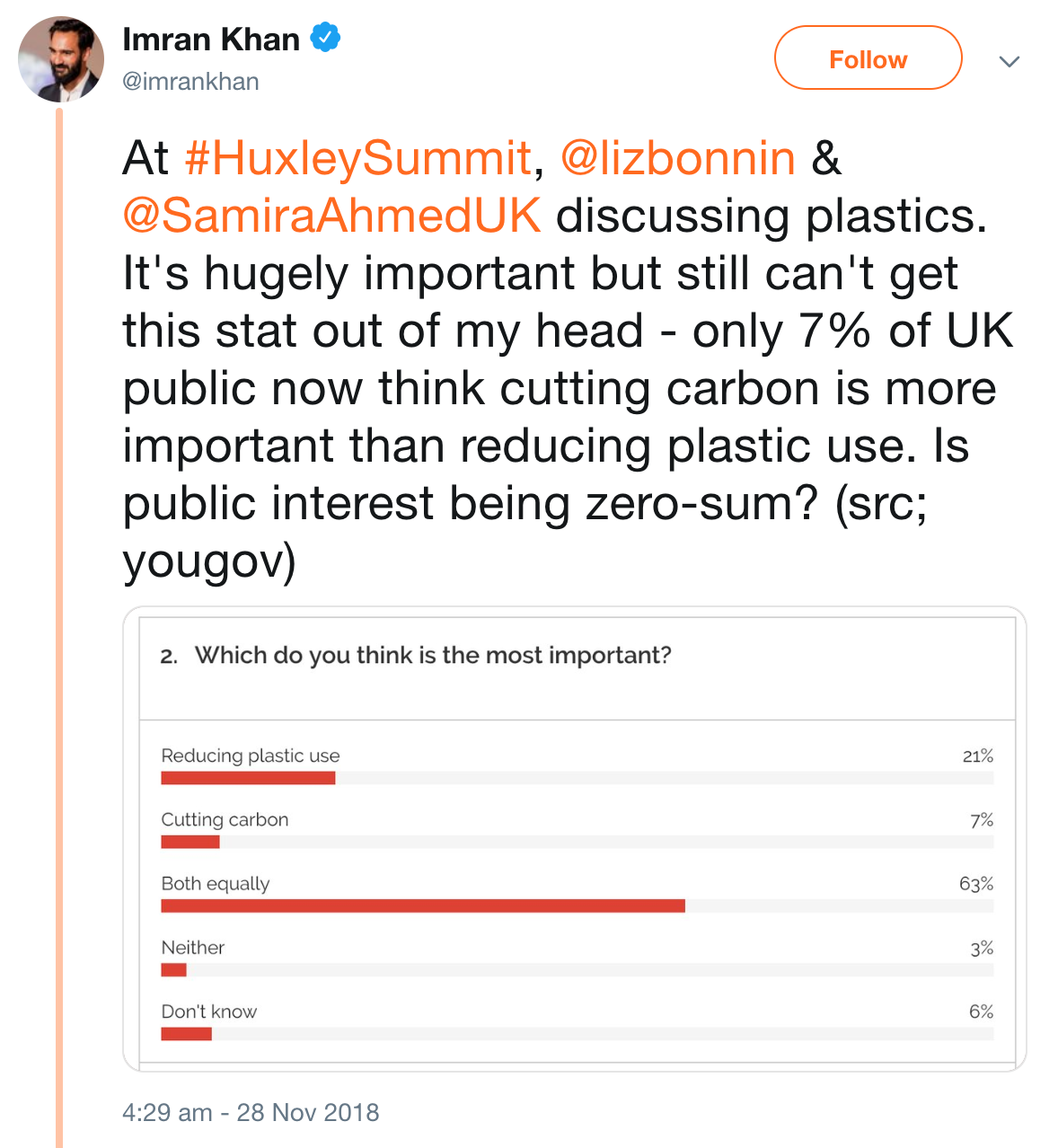

Imran Khan noted a similar, disturbing fact at the Huxley Summit:

This 2013 article from Ian Bogost also touches on types of work and how we feel about it:

Take email. A friend recently posed a question on Facebook: “Remember when email was fun?” It’s hard to think back that far. On Prodigy, maybe, or with UNIX mail or elm or pine via telnet. Email was silly then, a trifle. … The worst part of email was forwarded jokes, and even those seem charming in retrospect. Even junk mail is endearing when it’s novel.

Now, email is a pot constantly boiling over. Like King Sisyphus pushing his boulder, we read, respond, delete, delete, delete, only to find that even more messages have arrived whilst we were pruning. A whole time management industry has erupted around email, urging us to check only once or twice a day, to avoid checking email first thing in the morning, and so forth. Even if such techniques work, the idea that managing the communication for a job now requires its own self-help literature reeks of a foul new anguish.

……

It’s easy to see email as unwelcome obligations, but too rarely do we take that obligation to its logical if obvious conclusion: those obligations are increasingly akin to another job — or better, many other jobs. For those of us lucky enough to be employed, we’re really hyperemployed — committed to our usual jobs and many other jobs as well. It goes without saying that we’re not being paid for all these jobs, but pay is almost beside the point, because the real cost of hyperemployment is time. We are doing all those things others aren’t doing instead of all the things we are competent at doing. And if we fail to do them, whether through active resistance or simple overwhelm, we alone suffer for it: the schedules don’t get made, the paperwork doesn’t get mailed, the proposals don’t get printed, and on and on.

The article also talks about other forms of online communication, but let’s stick with email, still the staple of much work, home and academic life.

When so much goes through one channel, many different communication rhythms are lost. Some email is, in essence, close to chat — back and forth about some immediate goal, or a quick alert (about a changed meeting time today or tomorrow, say, or a cancelled evening class). Some is of near-term relevance — a party this weekend, a week-long sale at an online store. Not urgent, but good to see within a day or so. Some is far less urgent — a longer, slower, reflective correspondence. This mixture of speeds seems to me more confusing and stressful than the mixture of topics and types of email. We’ve loaded one system with what in previous decades would have been quick notes passed by hand, postcards, and letters — no wonder we struggle to manage it. Systems like Slack can’t replace email — they simply offer a system which is better for one specific rhythm and pace of communication. It seems easier to move the quick messages into chat systems than to know what to do about messages that would benefit from a slower cadence.

Jonnie Penn writes about machine learning, and about corporations; machine learning is often just an advanced form of efficiency-seeking bureaucratisation:

Many contemporary AI systems do not so much mimic human thinking as they do the less imaginative minds of bureaucratic institutions; our machine-learning techniques are often programmed to achieve superhuman scale, speed and accuracy at the expense of human-level originality, ambition or morals.

David Runciman, a political scientist at the University of Cambridge, has argued that to understand AI, we must first understand how it operates within the capitalist system in which it is embedded. “Corporations are another form of artificial thinking-machine in that they are designed to be capable of taking decisions for themselves,” he explains.

“Many of the fears that people now have about the coming age of intelligent robots are the same ones they have had about corporations for hundreds of years,” says Mr Runciman. The worry is, these are systems we “never really learned how to control.”

The threat that Mr Runciman cautions against is that AI techniques, like playbooks for escaping corporate liability, will be used with impunity.

Jeni Tennison also wrote about AI and the recent CUKC event:

Quite a lot of debunking went on in the room. There were requests never to treat or talk about Sophia as AI; never to use the trolley problem as if it had anything to do with the choices autonomous cars would make; not to believe Babylon’s figures about triage accuracy; not to spread the falsehood that a sexbot was manhandled at an Australian trade fair; not to mischaracterise how DeepMind Health use patient data in Streams.

The trap we need to avoid is thinking any system (human or machine) is faultless.

All the AI practitioners urged caution and were concerned about hyperbole in the media narrative about AI. They pointed out that deep learning and reinforcement learning are only suitable for particular tasks and that much of the AI vision we are being fed requires techniques that haven’t been invented yet. There’s a danger that when the current wave of AI (machine learning) fails to meet high expectations we will enter another AI winter of reduced funding for research that slows progress again.

Although I cannot help but note that these same practitioners inevitably also benefit from and in some cases drive the hype…

My Cambridge colleague Jennifer Cobbe wrote about her frustrations with the way legal scholars engage with critical issues in today’s society. I’d touched on this in the closing panel at the Information Law and Policy Centre annual conference, based on my very limited experience; Jennifer helped me understand law more clearly through the following note:

…most lawyers are narrowly doctrinal in their thinking, but ultimately I see law as a kind of reflexive social construct (in that it’s created by society and has significant effects on society) so I’ve never understood how you can have any kind of meaningful or fulfilling engagement with law or the legal system without looking more broadly at society and what role law is playing within it.

What’s law, what’s ethics, what’s practice? There are many discussions about the relationship between tech ethics and law, and this article is a good overview of questions such as whether certifying ethics is meaningful or useful.

This article was enjoyable as rants so often are; ostensibly it’s a rant about design thinking, but it’s perhaps more a rant about the celebration of useless new things (wasteful projects that will never work) and how many high-profile “innovators” focus on building new things simply because fixing or improving existing things is just too hard for them.

For sure, [design thinking] is commonsense tarted up . . . by design.

Design Thinking’s roots in consulting are instructive. As Margaret Brindle and Peter Stearns explain in their book, Facing Up to Management Faddism: A New Look at an Old Force, fads often enter organizations from outside in moments of perceived crisis, and the fads complete certain functions for the organizations’ leaders. First, they assuage leaders’ worries and uncertainties because this novel thing promises to solve their problems. Second, the fads legitimate the organization because it can show that it is keeping up with all the new, cool stuff out there. Third, fads enable leaders to show that they are doing something. And, finally, individuals get to champion this or that fad and, thus, build and advance their careers and win acclaim for being cutting-edge.

The early innovation studies thinker Everett Rogers used the term “laggard” in this way to refer to the last individuals to adopt new technologies. …neoliberalism sees established actors as self-serving agents who only look to maintain their turf and, thus, resist change.

This mindset is quite widespread among Silicon Valley leaders. It’s what led billionaire Ayn Rand fan Peter Thiel to put $1.7 million into The Seasteading Institute, an organization that, it says, “empowers people to build floating startup societies with innovative governance models.” Seasteaders want to build cities that would float around oceans, so they can escape existing governments and live in libertarian, free market paradise. It’s the same notion undergirding the Silicon Valley “startup accelerator” YCombinator’s plan to build entire cities from scratch because old ones are too hard to fix. Elon Musk pushes this view when he tweets things, like “Permits are harder than technology,” implying that the only thing in the way of his genius inventions are other human beings — laggards, no doubt. Individuals celebrated this ideological vision, which holds that existing organizations and rules are mere barriers to entrepreneurial action, when Uber-leader Travis Kalanick used a piece of software to break city laws. And then they were shocked, shocked, shocked when Kalanick turned out to be a total creep.

Now, if you have never been frustrated by bureaucracy, you have not lived. Moreover, when I was young, I often believed my elders were old and in the way. But once you grow up and start getting over yourself, you come to realize that other people have a lot to teach you, even when — especially when — they disagree with you.

I loved the idea of “crew neck capitalism” as a way of understanding recent corporate trends, especially (but not exclusively) in tech:

But it is not just the clothing that matters, rather the clothing is representative of an approach, an image and a set of ideals. The casual tone and familiarity permeates down into our interactions with these companies and platforms. This tone is peppered across the interactions and points of contact that we have with crew neck capitalism. It is highly likely that we have all experienced the casual tone and over familiarity of an app or software update message, or we’ve received messages of Christmas or birthday wishes from a company that doesn’t know us, or we have faced the constant encouragement to tell them, our capitalist friends, what is going on in our lives. It is a learned unprofessional tone that eases us into a sense that no one around here is anything like a hard-nosed capitalist, no one, we are reassured, is in any way trying to exploit us. They just want us all to connect together to make a better world. They project the friendly facilitator type image, playing down the money, power and influence.

and similarly, Kate Devlin:

Diane Coyle reviews Colin Mayer’s recent book about corporations and society, and one note stood out for me:

Another suggestion — industrial foundations run by trustees — is more appealing.

Nice to have a book ending with practical recommendations :)

It was also nice to come across some unexpected statistics on a shiny stats site (Statista) — in this case, R&D expenditure.