Weeknotes: technical debt and the fourth industrial revolution

Technical debt came up a couple of weeks ago, with an interesting article about how Wikimedia was addressing it. We also had a good chat about it on a Festival of Maintenance planning call, starting from the question of how maintenance issues in big software systems might differ from those in open source software. John Grant contrasted 'traditional' software technical debt with cases where, say, legacy IT systems are still in place after competitors have moved equivalent services to the cloud: "toxic IT." Inspired by questions from other Festival volunteers (many of whom don't work in software), I wondered whether other engineering fields had a concept of technical debt.

It's a term I'd only ever encountered in software, and it seemed to some extent linked to a sense that code is special and different from other forms of engineering, because you can "always change it later," at least in theory. So in software, more than in other fields, it's easy to amass issues you ought to come back to but can get away with not fixing for now. Or is it?

I discussed this with engineers from other fields: process, manufacturing, mechanical, product design. Whilst physical products are hard to change once they have been made, they still go through a development phase in which changes are made to digital designs or to knowledge rather than to physical things. During that phase, perhaps, they are not so different from software. I had a vague hypothesis that one difference between software and other engineering might be that software specifications are looser, whereas in other fields you may have very concrete and unchangeable requirements.

There are some parallels between tech debt and risk reduction. Some of the engineers I talked to referred to innovation projects (a useful reminder that not all projects are innovation ones!) where the cost of changing a design increases through the development process. That means it's important to pin the right things down early on, so that you don't need expensive changes later. With software, at least in theory, the cost of change doesn't increase so dramatically over time, so it's easier to leave things until later, as fixing them then will not cost hugely more. Another aspect is that scale is not always linear. A design that works OK for a one-off manufactured item (perhaps made by hand) may not work when you move to the full production process; similarly, code that works for one user or one machine may not work at scale.
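A toy model makes the contrast concrete. This Python sketch assumes the cost of a change multiplies by a fixed factor at each development phase. The phase names and the growth factors (10x per phase for a physical product, 1.2x for software) are invented for illustration; they are not figures from the engineers mentioned above.

```python
# Toy model of deferring a design fix. All numbers are hypothetical.

PHASES = ["concept", "design", "prototype", "production"]


def cost_of_fix(base_cost: float, phases_deferred: int, growth: float) -> float:
    """Cost of a change made after `phases_deferred` development phases,
    assuming the cost multiplies by `growth` at each phase boundary."""
    return base_cost * growth ** phases_deferred


# Assumed growth factors: steep for a physical product, nearly flat for
# software, where you can (in theory) "always change it later".
physical = [cost_of_fix(1.0, i, growth=10.0) for i in range(len(PHASES))]
software = [cost_of_fix(1.0, i, growth=1.2) for i in range(len(PHASES))]

for phase, p, s in zip(PHASES, physical, software):
    print(f"{phase:<11} physical: {p:7.1f}   software: {s:5.2f}")
```

Under these made-up assumptions, a fix deferred to production costs 1000 times the original for the physical product but only about 1.7 times for software, which is why leaving things until later feels so much cheaper in code. Real cost curves are, of course, messier than a single growth factor.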

Process engineers seem to have a concept not entirely unlike technical debt, but haven't had the term (some of them have it now, though!). They describe this related idea as something like a failure to do an adequate lifetime cost analysis. They also describe cases where weak processes or sub-optimal steps are kept through a production change to avoid risk from other things (such as the need for re-certification after a change), or where one set of people makes changes without regard to the upstream or downstream impacts on a process. These feel a little different to me. With technical debt in the software case, you know you are adding work or cost for later: it's not unexpected, it's an intentional choice.

I'd welcome thoughts on this from other engineers.

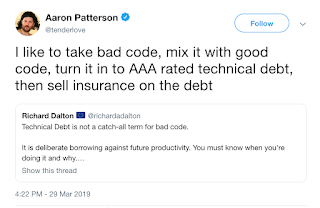

What interests me about technical debt as a term is that it changes how we think about the thing itself. It is not quite the same as reducing risk through an innovation process, or failing to account for the complete scale-up process. It describes an intentional accrual of work for the future, which does not necessarily affect the near term at all (or even makes the near term more productive in some way). Whilst the term acknowledges it, and in some cases we allocate time to deal with it down the line, it's not usually formally accounted for. Would capturing it in some way on a company balance sheet or dashboard help encourage more responsible and sustainable software engineering?
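One way to picture the balance-sheet idea is a register that takes the debt metaphor literally: each deliberate shortcut is recorded with a "principal" (the estimated effort to fix it properly) and "interest" (the extra effort it causes every month it stays). This is a hypothetical sketch, not an established accounting method; the `DebtItem` schema, the person-day units, the example items and the simple, non-compounding interest are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class DebtItem:
    """One deliberate shortcut, recorded when it is taken (hypothetical schema)."""
    description: str
    principal: float           # estimated effort to fix properly, in person-days
    interest_per_month: float  # estimated extra effort caused each month it stays

    def cost_if_fixed_after(self, months: int) -> float:
        """Total effort if fixed after `months`: simple interest paid plus principal."""
        return self.principal + self.interest_per_month * months


def dashboard(items: list[DebtItem], months: int) -> dict[str, float]:
    """Summarise the register as a balance-sheet style liability."""
    return {
        "principal_outstanding": sum(i.principal for i in items),
        "monthly_interest": sum(i.interest_per_month for i in items),
        f"cost_if_repaid_in_{months}_months": sum(
            i.cost_if_fixed_after(months) for i in items
        ),
    }


# Hypothetical example entries.
register = [
    DebtItem("hard-coded config instead of a settings file", 2.0, 0.5),
    DebtItem("no automated tests on the billing module", 10.0, 3.0),
]
print(dashboard(register, months=6))
```

With these made-up estimates, leaving both items for six months turns 12 person-days of principal into 33 person-days in total. A figure like that is the kind of thing a dashboard could surface alongside delivery metrics.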

https://twitter.com/tenderlove/status/1111664818140794880

The wonderful folks over at The Maintainers have a set of critical articles out about the fourth industrial revolution. There's a lot of good stuff there - starting with the intro:

The notion of a 4th IR, and the technological developments associated with it, continue to figure prominently in global discourses. No day passes without the appearance of an article or opinion piece on machine learning, the politics of algorithms, the power of social media, the effect of autonomous vehicles, the increasing automation of work, the volatility of cryptocurrency, and so on. Less common, though much needed and increasing, is thoughtful consideration, informed by research and expertise, about the socioeconomic impacts of these technologies. Relatively little is said about who finances these developments, who makes choices among them, and who ultimately benefits. There is a good deal at stake today in this 4th IR, whether or not it actually exists or will. Now is the critical time to start analyzing the 4th IR.

I also enjoyed the piece contrasting the 4th Industrial Revolution with Industry 4.0, another about climate and the interactions between state and industry, and this wander through previous Industrial Revolutions and why such stories are useful, and to whom (who remembers how the atomic age was to be the next revolution?).

Prediction should lead us to take actions that address a range of possibilities. Moreover, historical and social scientific studies have demonstrated for decades that technology involves both people and things. We sometimes speak of “sociotechnical systems.” Predictions, either optimistic or grim, often focus too much on things, and not enough on people and their relations. The ideology of the technological fix encourages us to think this way. Our inability or unwillingness to confront the social aspect of the future in as much detail as the technical is one big reason why predictions are often so wrong. Finally, because so much of prediction serves the predictors’ interests, and attempts to get us to buy things and ideas, we must be very cautious about whose interests a given prediction is serving.

Highlight mine.

What’s remarkable about discussions for the 4th Industrial Revolution is that it allows good capitalists to put forward socialist policies without blinking. It’s amazing how much coverage the idea of Universal Basic Income (UBI), which guarantees unconditional payment to all citizens, has gotten in the last few years. ... The point is, however, that, if UBI policies make sense, they don’t only make sense in situations where 4th IR visions come to fruition. They make sense in our ordinary and often crummy reality.

Oooh.

The simple fact is that most mainstream political parties are not offering any solutions or viable visions for escaping this profound basket of problems. It seems like one benefit of the 4th IR is that it can — though doesn’t necessarily — lead us to face them by suggesting that they will only grow worse. Whether we are speaking about multi-generational poverty or climate change, there are social (as opposed to merely technical) experiments we should try out now, rather than waiting for AI or robots to come and eat our lunch, or some magic bullet technology to come along and save our butts.

Big companies continue to fascinate me, and I guess to repel me, as I keep working with small organisations where I can grasp the whole and feel I am avoiding something I wouldn't like. So the corporate analysis in this article about the history of innovation and coordination across sectors was especially interesting:

But 2nd IR companies also often grew through vertical integration, so that every piece of their operations, from raw materials to finished products, could be done in-house. Whether as a matter of diversification or vertical integration — this was a matter of debate — these companies often included units devoted to scientific research. The classic 2nd Industrial Revolution companies possessed giant corporate laboratories churning out discoveries. Many of those discoveries were given away, so that firms could claim to be operating for the public good and thereby legitimate their monopolies. A particularly notable example is the transistor, the building block of modern computers, which was invented at AT&T in 1947 and quickly shared with other companies to create what became the global semiconductor industry.

The complex interconnectedness of our world of production also gets a mention:

Over time, other firms adopted that model, and shed more and more activities to outside vendors. ... Apple is probably the paradigmatic firm of this type; very little that’s made by Apple actually goes into an iPhone — instead, the company’s role is to coordinate a network of vendors who each contribute a piece to the whole.

But how do organisations organise themselves for this?

The big question for 3rd Industrial Revolution firms is: how do you know what to do? In a 2nd IR company, information moves up and down a chain of command. Everyone is supposed to know what their unit should do to move the company forward. You know where the components of your products come from — you make them yourself. You don’t have that luxury in a 3rd IR company — everyone operates in a sea of other companies swimming in every direction. How can you ensure that you get the parts, the technologies, the knowledge that you need when you need them? How can you ensure that you and your customers and vendors are on the same trajectory?

The answer, I think, is something like a bat-signal. You know how those work, right? You can’t rely on the police — in-house security is too slow and too conventional and upper management doesn’t know what it’s doing, all classic 2nd Industrial Revolution problems. So you outsource protecting the city to a private contractor, Batman. But no one knows who Batman is, so you can’t contact him directly to tell him what you need. Instead, if there’s trouble, you make him aware of it by beaming a signal into the sky that everyone can see and react to. That’s the 3rd Industrial Revolution way — no one tells Batman what to do, but he can see what you need him to do.

What's the bat-signal for corporates?

By the end of the ’80s, Moore’s Law was formally enshrined in industrial “roadmaps” which operate just like bat-signals. Everyone in the industry or wanting to be in the industry can look at the roadmap and know just where their competitors and vendors will be two, five, ten years from now, and therefore what they need to do to survive over that timeframe.

These companies still use bat-signals, but not the dry, technocratic roadmaps that 3rd IR companies employ. Instead, their leaders are constantly in public, beaming visions of the future into the sky so everyone knows what they want without them having to issue direct orders. Presumably they do issue orders within their companies, but the reason they project these bat-signals is that their desires are just as (probably more) likely to be met by external actors whom they can later buy out or form collaborations with.

This is the Davos and Aspen circuit, TED talks and so on.

The bat-signal creates followers, and it creates people who are left behind. Now, some of these bat-signals are relatively benign... But other captains of the 4th Industrial Revolution are beaming up much more dubious signals. For instance, Peter Thiel’s penchant for seasteading looks to me like a ploy to destroy the idea of a public good — i.e., it promotes the aim of siphoning taxable wealth onto artificial islands populated by the rich and beautiful and leaving nation-states to starve.

...I think we stand in the same place relative to the 4th Industrial Revolution as our forerunners did in the years around 1970 relative to the 3rd. Those were the years in which firms like Intel arose via a critique of the 2nd Industrial Revolution. But there were lots of other critiques around in those years as well... Today, the 4th IR is being put forward as a critique of the 3rd, but plenty of other critiques are available. Some are rapidly being appropriated by the Thiels, Musks, and Zuckerbergs of the world. But let’s try to ensure that the critiques which imagine a world the rest of us might actually want to live in have a chance.

Moving on to one of the other articles, on the financialisation of innovation this century:

I am convinced that there exists a direct line between the financialization of the American economy in the last third of the 20th century and the reckless rapidity with which new technologies have been introduced in the open market. The push to get to market first, the emphasis on speed, not to mention the sense of inevitability about the potential negative consequences of these technologies for well-being at so many levels, all relate to the overriding demand for new financial products offering high yields in a low-interest rate environment.

So there's nothing new under the sun. However, there might be ways forward from history:

In previous eras, though the underlying technologies were different, the monetization of knowledge and the pressures to realize short-term returns to invention also distorted or negated positive features of emerging technologies, unnecessarily magnifying their negative consequences for society. This aspect of innovation, now disingenuously termed “unintended consequences,” goes back a long way.

The Venetian city-state, which attached a premium to attracting advanced technology nevertheless also routinely held formal hearings before granting its coveted 20-year patent monopolies to inventors. Venice went out of its way to reward inventors, foreign and domestic, but it was not naïve about unintended consequences. No doubt as a result of bitter experience, Venice anticipated and wanted to prepare for, what might be in store for the more vulnerable parts of its population.

Thinking about the knowledge economy, and intellectual property:

As Catherine Fisk has shown in her book Working Knowledge, individual inventors have also suffered, at least since the early 19th century, as attempts to monetize technology have deprived them of the once inalienable rights to the fruits of their knowledge work.

When innovation was state funded, was more consideration given to its negative impacts on labour and society? Is the market prepared to deal with the unforeseen drawbacks of deploying technology?

Are we now producing more technologies to meet derived demand, rather than actual tangible needs for goods and services?

The article outlines a range of reasons for developing technology that are detached from direct demand, and together they support a lot of activity:

Of course, the demand for investment products in technology rests on the assumption that actual products and services based on it will eventually reach a real market, but in the meantime the idea of the potential applications of the technology and the insatiable appetite for risky investments that can produce higher yields than more ordinary investments supports a profitable stream of earnings for underwriters and preferred early investors, not to mention journalists and consultants who focus on new technologies.

...AI, it seems, is something that many investors, both institutional and retail, are wanting to have represented in their portfolios. I recall a similar demand for robotics in the 1980s and how it led to many investments in bogus companies that hardly went farther than buying expensive office furniture. A similar discussion may have taken place in corporate board rooms in the early years of the 3rd Industrial Revolution around the importance of investing in research in electronics, but I cannot imagine anyone at that time saying they wanted to invest in Physics.

Ha ha. Margaret Graham goes on to explore other forms of innovation support from the state:

Though much of what the U.S. Government contributed to technological innovation was invisible to the general public, it had been providing many innovation services that would not be so readily picked up by private industry or backed by private funding. Governments had provided early markets, paying uneconomic prices, and also operated what amounted to the first test-beds for many innovations.

Twentieth-century US innovation was slower, and that had advantages:

In both universities and industrial laboratories where much of the relevant research was actually performed, the scarcity of research personnel and the burden of educating a new generation of scientists slowed the momentum of research until a new generation took over. ... What is often forgotten is that the perceived delays had the beneficial effect that manufacturers, users and funders had time to adjust to the demands of the new technologies, to train for their special needs both for manufacturers and managers and to become acquainted with their economics.

The conclusion, about the present era, is worrying:

All of these are reminiscent of the conditions that brought about the enclosing of the US innovation system in the 1930s.

Via The Prepared, research into the effects of bans on plastic shopping bags:

Plastic shopping bag bans result in increased sales of plastic garbage bags. "Using sales data from retail outlets, I found that bag bans in California reduced plastic carryout bag usage by 40 million pounds per year, but that this reduction was offset by a 12 million pound annual increase in trash bag sales. This meant that 30 percent of the plastic eliminated by the ban was coming back in the form of trash bags, which are thicker than typical plastic carryout bags."

Tim Hayward's rant a few weeks ago stuck with me, and I was intrigued by this in-depth exploration of the world of pallets.

Quite a few people have mentioned Rebecca Solnit's lovely article about the role of the hero vs collective action in fiction and reality.

Kelsey Piper tweets about Google's short-lived AI ethics board. The interesting bit, though, is about OpenAI (highlight mine):

OpenAI's press releases say weird-sounding stuff like "we are willing to merge with a value-aligned organization (even if it means reduced or zero payouts to investors) to avoid a competitive race which would make it hard to prioritize safety."

'weird-sounding' is a compliment here - if you're thinking seriously about being responsible in a emerging field, some of your resulting decisions will be really different from industry norms. If you're doing everything the same as everyone else, you haven't thought about it much

A look at content providers (or big tech companies) and submarine cable ownership (HT Steve Song).

What does good 'digital' look like? It's this, not this.

Richard Pope writes about street lighting and draws out connections with the world of digital government. I particularly liked the idea of the complexity of local authorities and government structures being abstracted away in a drive for a one-size-fits-all design. The comments on private finance initiatives and the limitations on public infrastructure innovation these may bring are ones I've heard locally in Cambridge too.